Introduction

In this blog post, we'll explore how to automate the installation of Docker and Docker Compose on AWS EC2 instances using Terraform. By automating this process, we can easily set up a Docker Swarm cluster with minimal manual intervention.

Prerequisites:

Before we begin, make sure you have the following:

AWS account with appropriate permissions

Terraform installed on your local machine

Access to an SSH key pair for connecting to EC2 instances

Creating a Default VPC

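The default VPC in AWS provides an easy way to get started with EC2 instances and other AWS resources. The aws_default_vpc resource behaves differently from most Terraform resources: if the region already has a default VPC, Terraform adopts it into management rather than creating a new one. That lets us attach a user-defined Name tag for better organization. This code lives in security-groups.tf.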

resource "aws_default_vpc" "default_vpc" {
  tags = {
    Name = "default vpc"
  }
}

Defining Security Group Rules

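Next, we'll define a security group named "sgswarm" to control traffic to the Docker Swarm nodes. It allows inbound traffic on all TCP ports and ICMP (ping) requests from any IP address, and outbound traffic on all TCP ports. That is convenient for a demo but far too open for anything beyond one. Note also that these rules cover TCP only, while Docker's Swarm documentation lists UDP 7946 (node communication) and UDP 4789 (overlay network traffic) among the ports the nodes use to talk to each other.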

resource "aws_security_group" "sgswarm" {
  name   = "sgswarm"
  vpc_id = aws_default_vpc.default_vpc.id

  tags = {
    Name = "allow_tls"
  }

  # Allow all inbound TCP traffic
  ingress {
    from_port   = 0
    to_port     = 65535
    protocol    = "tcp"
    cidr_blocks = ["0.0.0.0/0"]
  }

  # Allow all outbound TCP traffic
  egress {
    from_port   = 0
    to_port     = 65535
    protocol    = "tcp"
    cidr_blocks = ["0.0.0.0/0"]
  }

  # Allow ICMP (ping) requests
  ingress {
    from_port   = -1
    to_port     = -1
    protocol    = "icmp"
    cidr_blocks = ["0.0.0.0/0"]
  }
}
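The rules above open every TCP port to the internet. As a rough sketch of a tighter alternative, the group below allows SSH only from one administrator address and opens the Swarm ports only between members of the group itself. The port numbers come from Docker's Swarm documentation (TCP 2377 for cluster management, TCP and UDP 7946 for node communication, UDP 4789 for overlay traffic). The resource name sgswarm_restricted, the admin_cidr variable, and its placeholder address are hypothetical and not part of the original setup.

```hcl
# Hypothetical variable: the single address range allowed to SSH in.
variable "admin_cidr" {
  description = "CIDR block allowed to reach the nodes over SSH"
  type        = string
  default     = "203.0.113.10/32" # documentation-range placeholder, replace with your own IP
}

# Hypothetical, stricter variant of the sgswarm group.
resource "aws_security_group" "sgswarm_restricted" {
  name   = "sgswarm-restricted"
  vpc_id = aws_default_vpc.default_vpc.id

  # SSH from the administrator only
  ingress {
    from_port   = 22
    to_port     = 22
    protocol    = "tcp"
    cidr_blocks = [var.admin_cidr]
  }

  # Swarm cluster management, node to node only
  ingress {
    from_port = 2377
    to_port   = 2377
    protocol  = "tcp"
    self      = true
  }

  # Node communication (TCP and UDP 7946)
  ingress {
    from_port = 7946
    to_port   = 7946
    protocol  = "tcp"
    self      = true
  }

  ingress {
    from_port = 7946
    to_port   = 7946
    protocol  = "udp"
    self      = true
  }

  # Overlay network traffic (UDP 4789)
  ingress {
    from_port = 4789
    to_port   = 4789
    protocol  = "udp"
    self      = true
  }

  # Allow all outbound traffic so the nodes can download packages and images
  egress {
    from_port   = 0
    to_port     = 0
    protocol    = "-1"
    cidr_blocks = ["0.0.0.0/0"]
  }
}
```

Because the Swarm rules use self = true, they only match traffic between instances in this group over their private addresses, so the nodes would need to join the swarm using private IPs.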

Understanding Terraform Variables

Terraform variables allow us to parameterize our infrastructure code, making it more flexible and reusable. We can define variables with default values and descriptions to provide context for their usage.

variable "aws_region" {
  description = "AWS region in which we will set up the swarm cluster"
  default     = "ap-south-1"
}

variable "ami" {
  description = "Amazon Linux AMI"
  default     = "ami-007020fd9c84e18c7"
}

variable "instance_type" {
  description = "Instance type"
  default     = "t2.micro"
}

variable "key_path" {
  # The .pem file downloaded from AWS holds the private key of the pair
  description = "Path to the SSH private key (.pem) file"
  default     = "/Project_Mario_game.pem"
}

variable "key_name" {
  description = "Desired name of Keypair..."
  default     = "Project_Mario_game"
}

variable "bootstrap_path" {
  description = "Script to install Docker Engine"
  default     = "./install_docker_machine_compose.sh"
}

Explanation:

aws_region: The AWS region where the Docker Swarm cluster is set up. The default is "ap-south-1"; change it to your preferred region.

ami: The Amazon Machine Image (AMI) used for the EC2 instances in the Swarm cluster. The description calls it an Amazon Linux AMI, but the provisioning steps later copy files to /home/ubuntu, which implies an image with an ubuntu user. AMI IDs are region-specific, so update this value whenever you change aws_region.

instance_type: The type of EC2 instance to use. The default is "t2.micro", which is suitable for testing and small workloads.

key_path: The path to the key file used for SSH access to the EC2 instances. The default points to a PEM file named "Project_Mario_game.pem"; a .pem file downloaded from AWS contains the private key of the pair.

key_name: The name of the EC2 key pair used to access the instances. The default is "Project_Mario_game".

bootstrap_path: The path to the script that installs Docker Engine on the EC2 instances. The default points to a shell script named "install_docker_machine_compose.sh".
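Defaults like these can be overridden without editing the variable blocks. One common way is a terraform.tfvars file next to the configuration, which Terraform loads automatically. The values below are hypothetical and only illustrate the shape of the file.

```hcl
# terraform.tfvars (hypothetical values, adjust to your own account)
aws_region    = "us-east-1"
instance_type = "t3.micro"

# AMI IDs differ per region, so a region change needs a matching AMI ID.
# The ID is left out here on purpose; look it up for your region and add:
# ami = "<ami id for your region>"
```

A single value can also be overridden on the command line, for example terraform apply -var="instance_type=t3.micro".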

Setting Up Terraform Configuration

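Next, let's create a Terraform configuration file (main.tf) to define our AWS resources and provisioning steps. The provider block below reads var.access_key and var.secret_key, which are not among the variables shown earlier, so they must be declared as well. Avoid giving them default values or committing them to version control.

provider "aws" {
  region     = var.aws_region
  access_key = var.access_key
  secret_key = var.secret_key
}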

# Define variables and resources...
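The provider block refers to two credential variables. A minimal sketch of how they could be declared is shown below. Marking them sensitive (available since Terraform 0.14) keeps their values out of plan and apply output. The descriptions are illustrative, not taken from the original repository.

```hcl
# Credentials referenced by the provider block.
# No defaults on purpose, so the secrets never live in the code.
variable "access_key" {
  description = "AWS access key ID"
  type        = string
  sensitive   = true
}

variable "secret_key" {
  description = "AWS secret access key"
  type        = string
  sensitive   = true
}
```

The values can be supplied through the TF_VAR_access_key and TF_VAR_secret_key environment variables. Alternatively, the access_key and secret_key arguments can be dropped from the provider block altogether, in which case the AWS provider falls back to the standard AWS_ACCESS_KEY_ID and AWS_SECRET_ACCESS_KEY environment variables or the shared credentials file.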

Defining AWS Instances

We'll define three AWS EC2 instances - one master node and two worker nodes - using the aws_instance resource.

resource "aws_instance" "master" {
  # Define instance configuration...
}

resource "aws_instance" "worker1" {
  # Define instance configuration...
}

resource "aws_instance" "worker2" {
  # Define instance configuration...
}
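The instance configuration is elided above. As a rough idea of what it might contain, the sketch below wires the master node to the variables and security group defined earlier. The argument choices and the Name tag are assumptions, not the author's actual configuration; the worker blocks would follow the same shape with their own names.

```hcl
# Sketch only: one plausible way to fill in the master node.
resource "aws_instance" "master" {
  ami                    = var.ami
  instance_type          = var.instance_type
  key_name               = var.key_name
  vpc_security_group_ids = [aws_security_group.sgswarm.id]

  tags = {
    Name = "swarm-master" # hypothetical tag value
  }
}
```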

Provisioning Docker and Docker Compose

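We'll use provisioner blocks to run the installation on each EC2 instance. A file provisioner first copies the shell script (install_docker_machine_compose.sh) to the instance; a remote-exec provisioner then makes it executable and runs it to install Docker and Docker Compose. Both provisioners work over SSH, so the resource also needs a connection block with the login user and private key, which is part of the elided instance configuration here.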

resource "aws_instance" "master" {
  # Define instance configuration...

  # Copy the install script to the instance
  provisioner "file" {
    source      = "./install_docker_machine_compose.sh"
    destination = "/home/ubuntu/install_docker_machine_compose.sh"
  }

  # Make the script executable, then run it
  provisioner "remote-exec" {
    inline = [
      "sudo chmod +x /home/ubuntu/install_docker_machine_compose.sh",
      "sudo sh /home/ubuntu/install_docker_machine_compose.sh",
    ]
  }
}

# Similar provisioner blocks for worker instances...
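The file and remote-exec provisioners need to know how to reach the instance. The sketch below shows one way the connection block could look for the master node. The ubuntu login user is inferred from the /home/ubuntu destination path, and reading the key with file(var.key_path) assumes key_path points to the private key; both are assumptions rather than the author's confirmed settings.

```hcl
# Sketch only: master node with an SSH connection for its provisioners.
resource "aws_instance" "master" {
  ami                    = var.ami
  instance_type          = var.instance_type
  key_name               = var.key_name
  vpc_security_group_ids = [aws_security_group.sgswarm.id]

  # How Terraform logs in to run the provisioners below
  connection {
    type        = "ssh"
    host        = self.public_ip
    user        = "ubuntu"           # assumed from the /home/ubuntu path
    private_key = file(var.key_path) # assumes key_path is the private key
  }

  # Copy the install script, reusing the bootstrap_path variable
  provisioner "file" {
    source      = var.bootstrap_path
    destination = "/home/ubuntu/install_docker_machine_compose.sh"
  }

  # Make the script executable, then run it
  provisioner "remote-exec" {
    inline = [
      "sudo chmod +x /home/ubuntu/install_docker_machine_compose.sh",
      "sudo sh /home/ubuntu/install_docker_machine_compose.sh",
    ]
  }
}
```

Using var.bootstrap_path for the source keeps the script location in one place instead of repeating the literal path.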

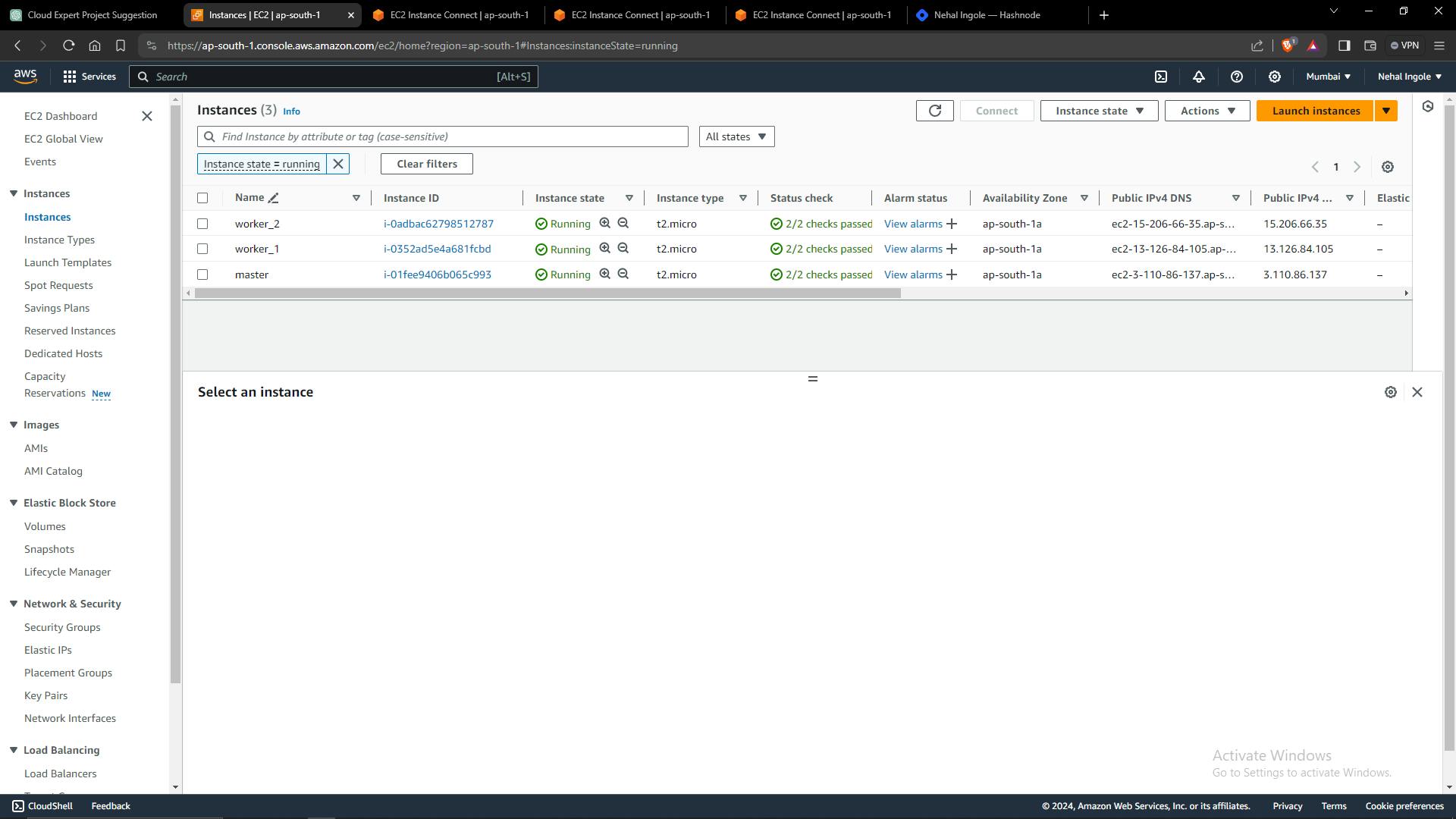

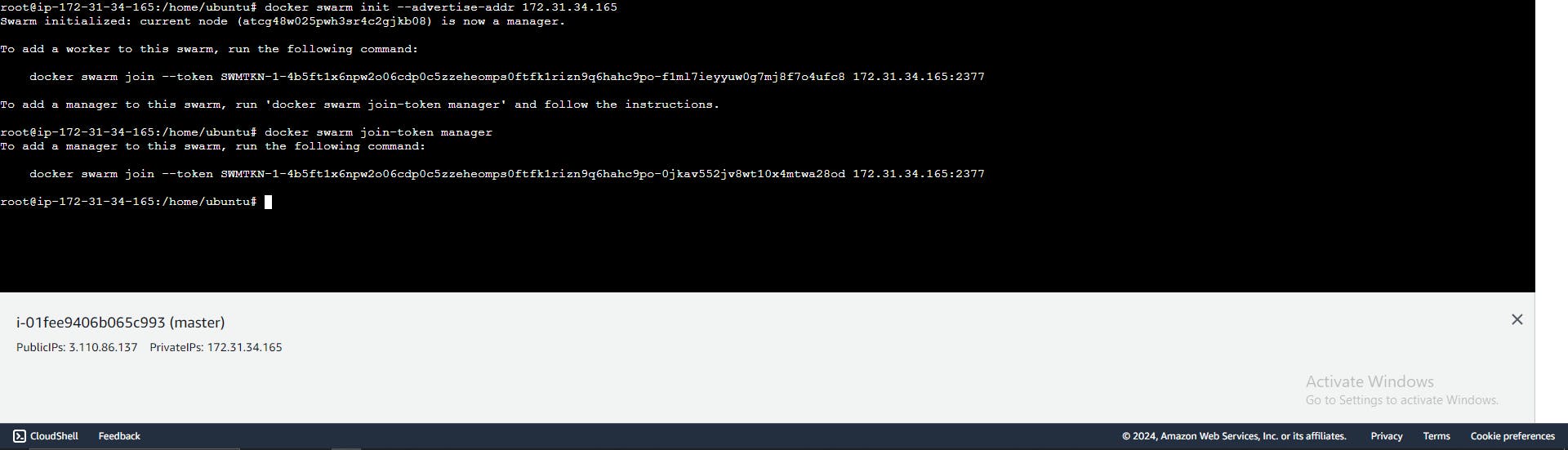

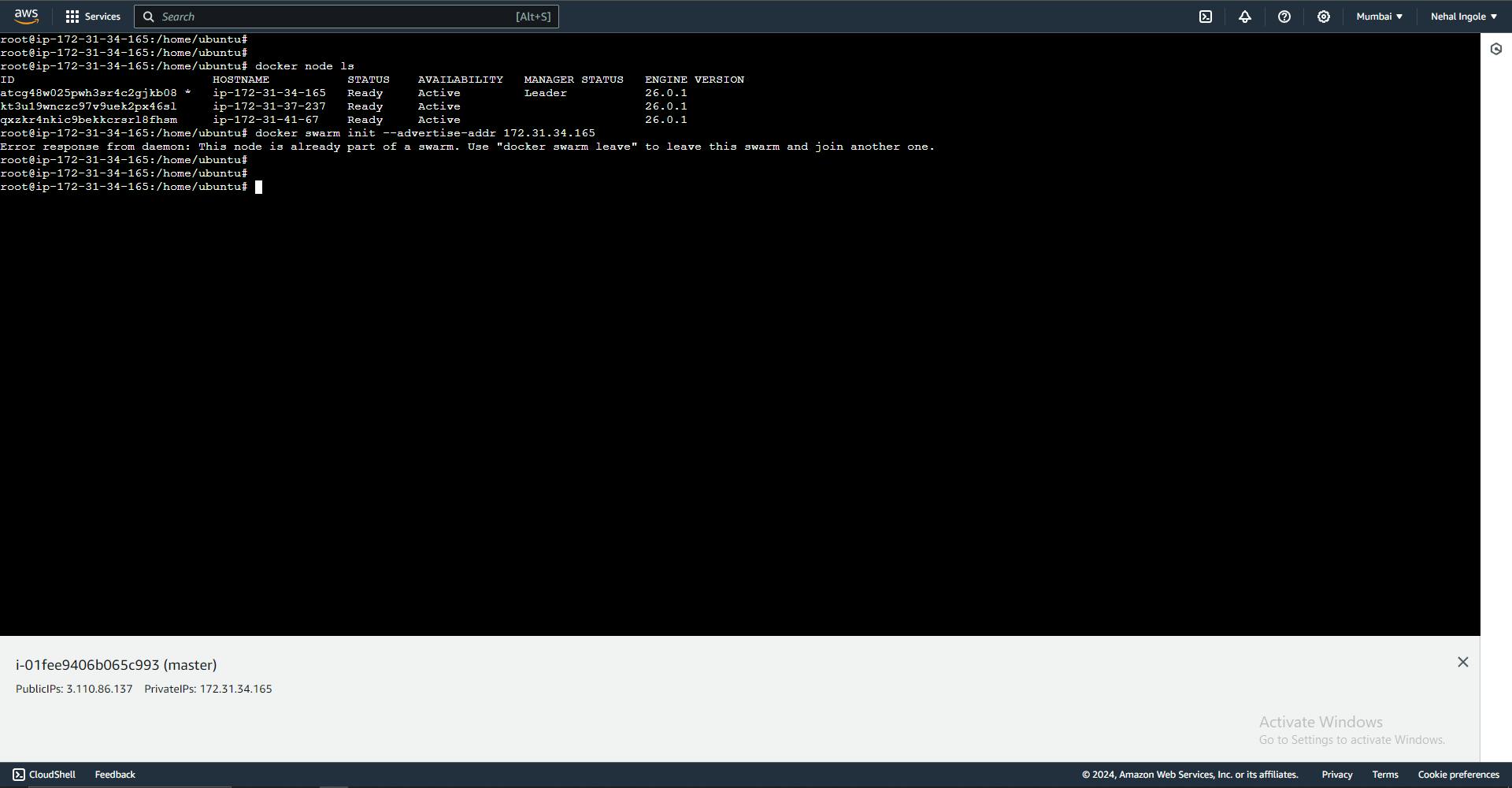

Result

All instances running properly

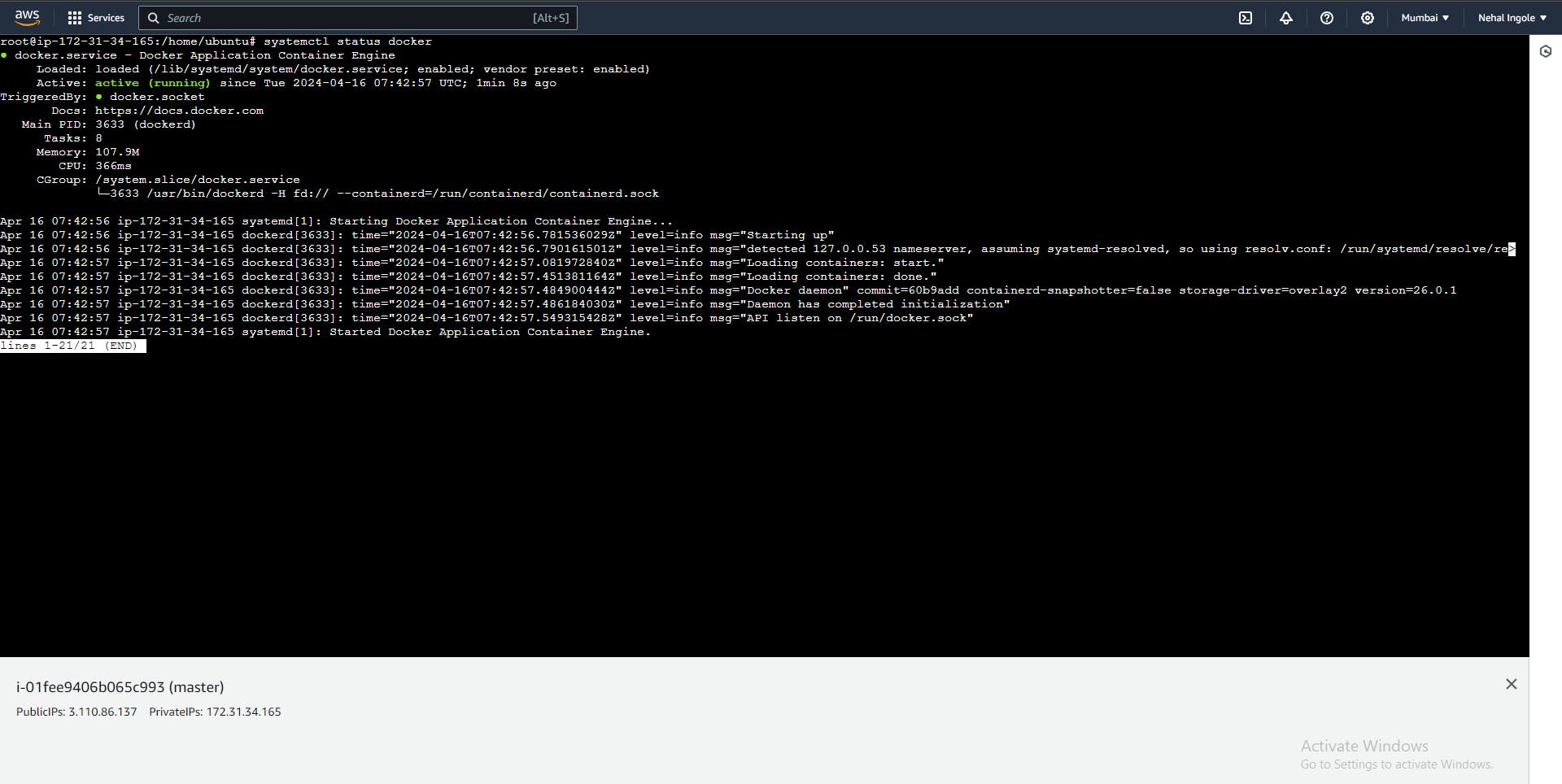

Master node

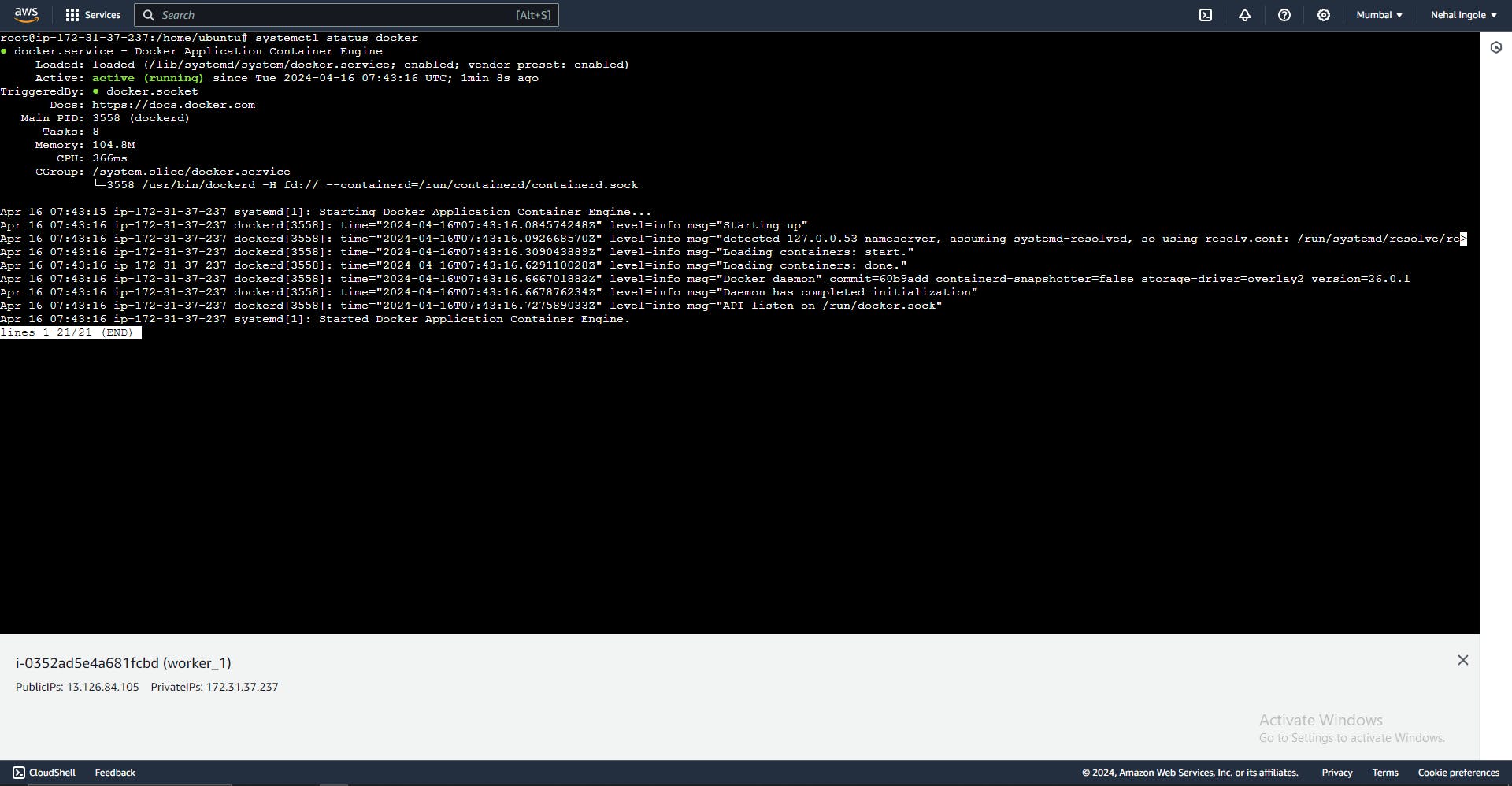

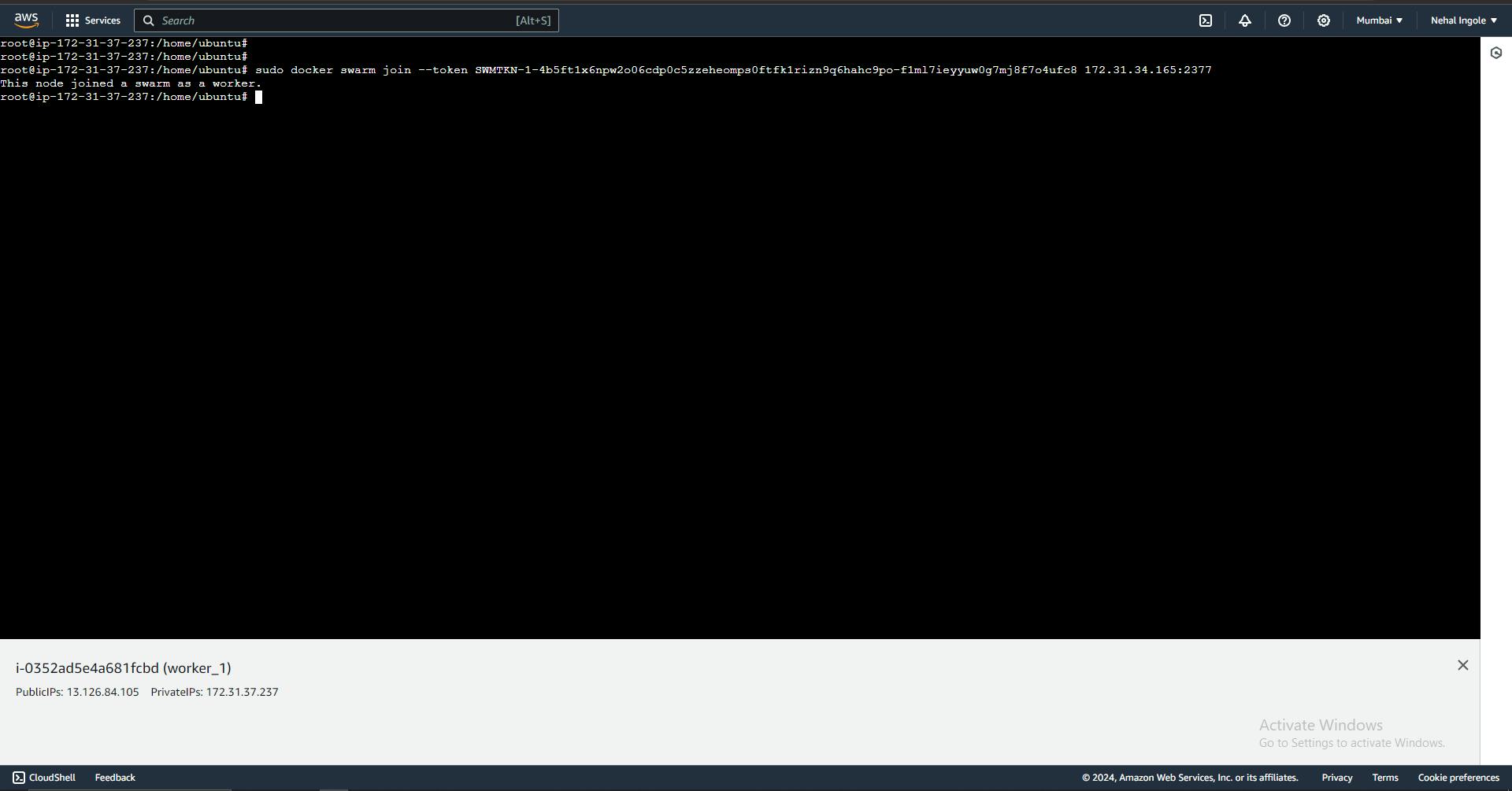

Worker_1

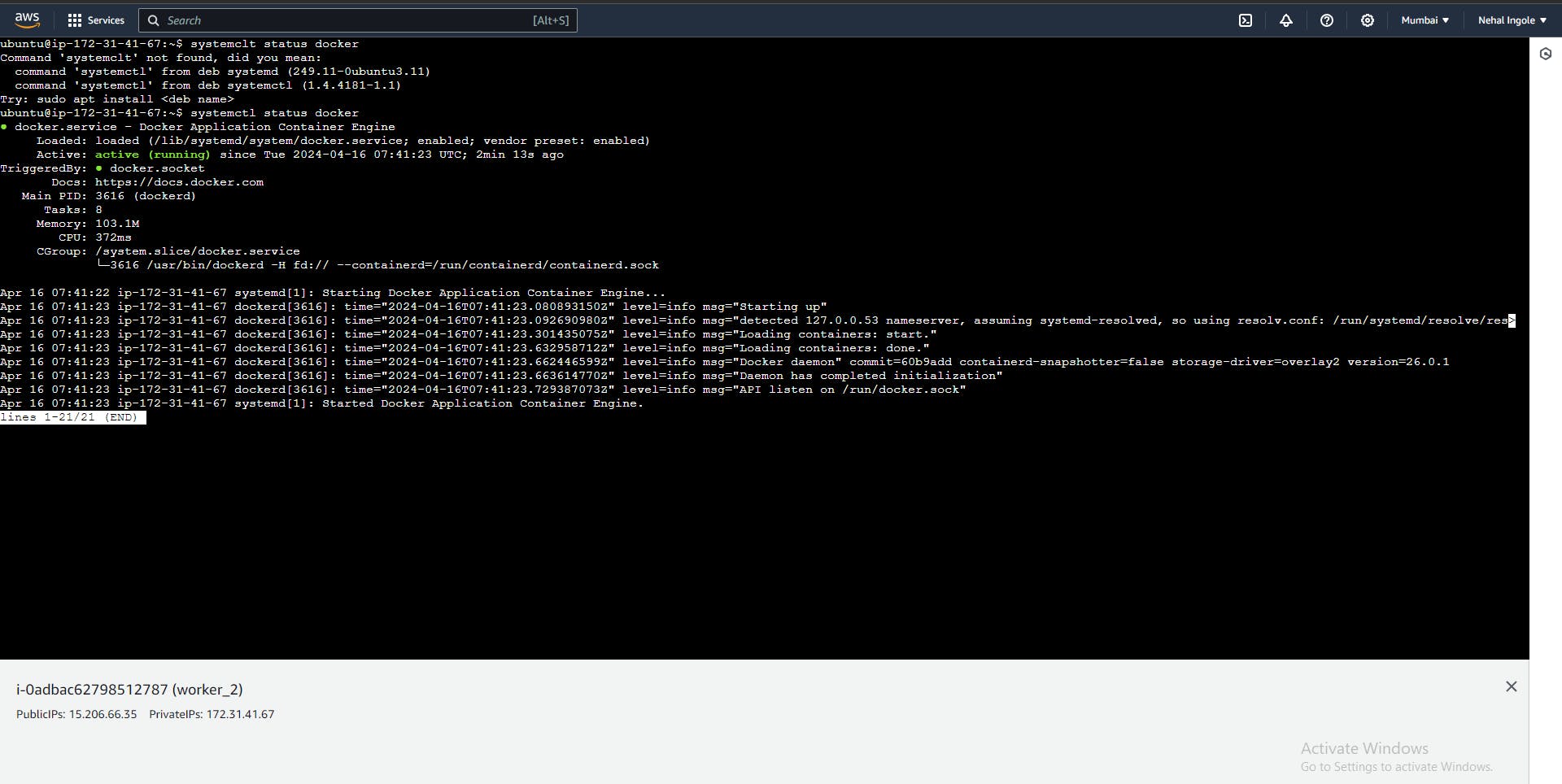

Worker_2

Output of the worker nodes joining the swarm

All nodes connected to the master node successfully

Worker_1 connection complete

Worker_2 connection complete
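The step that actually forms the swarm is not shown in the snippets above. One possible way to start it from Terraform is sketched below: a null_resource that runs docker swarm init on the master once the instance and its provisioners have finished. This is an assumption about how the step could be automated, not the author's confirmed method; the resource name swarm_init, the output name, and the ubuntu login user are hypothetical or inferred.

```hcl
# Sketch only: initialise the swarm on the master after Docker is installed.
resource "null_resource" "swarm_init" {
  # Re-run only if the master instance is replaced
  triggers = {
    master_id = aws_instance.master.id
  }

  connection {
    type        = "ssh"
    host        = aws_instance.master.public_ip
    user        = "ubuntu"           # assumed login user
    private_key = file(var.key_path) # assumes key_path is the private key
  }

  provisioner "remote-exec" {
    inline = [
      # Advertise the private address so workers in the same VPC can reach it
      "sudo docker swarm init --advertise-addr ${aws_instance.master.private_ip}",
    ]
  }
}

# Handy for SSH-ing into the master afterwards
output "master_public_ip" {
  value = aws_instance.master.public_ip
}
```

The docker swarm init command prints a docker swarm join --token ... command. Running that command on each worker produces the join confirmations shown in the results above.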


Conclusion

By following this tutorial, you've learned how to deploy a multi-node Docker Swarm cluster on AWS using Terraform. Leveraging Terraform's infrastructure as code (IaC) approach and variables, you were able to easily customize key parameters such as the AWS region, instance type, and SSH key pair.

Throughout the tutorial, you gained insights into the following steps:

Defining Terraform variables to parameterize the infrastructure setup.

Creating an AWS default VPC and security group to control inbound and outbound traffic.

Configuring EC2 instances as Swarm nodes and provisioning them with Docker Engine using file and remote-exec provisioners.

Establishing a Docker Swarm cluster by joining worker nodes to the master node.

With your Docker Swarm cluster up and running, you now have a powerful platform for deploying and managing containerized applications at scale. Additionally, the use of Terraform ensures consistency, repeatability, and ease of management for your infrastructure.

Feel free to explore Docker Swarm features such as service deployment, scaling, and rolling updates to get the most out of your cluster. Happy containerizing!

GitHub link: https://github.com/Ingole712521/terraform-aws-learning/tree/main/Day_3_docker_swarm

Connect with us:

Hashnode: https://hashnode.com/@Nehal71

Twitter: https://twitter.com/IngoleNehal